NVIDIA Audio2Face is an AI tool that generates realistic 3D facial animation directly from an audio source, with no manual keyframing or motion capture required. Developed as part of the NVIDIA Omniverse™ ecosystem, it uses deep learning models to synchronize lip movements, expressions, and emotions with spoken dialogue, and it can do so in real time.

What Is NVIDIA Audio2Face?

Audio2Face is an AI-driven facial animation tool that converts speech into facial movement. It uses neural networks trained on human speech and facial motion data to produce lip-sync and emotional expressions from voice input.

Originally part of NVIDIA Omniverse, Audio2Face is now also available as an open-source release called Audio2Face-3D, which supports both pre-recorded audio and real-time streaming. It can be integrated with 3D engines such as Unreal Engine or Unity, which makes it practical for animators, game developers, and virtual avatar creators.

“NVIDIA Omniverse™ Audio2Face is a combination of AI-based technologies that generate facial animation and lip sync driven only by an audio source.”

— NVIDIA Documentation

Key Features

🎙️ Real-Time Lip Sync

Audio2Face analyzes incoming audio and generates lip movements timed to the spoken words. Because it can run in real time, creators get immediate feedback while they work.

😃 Emotion-Driven Expressions

The model does more than move the lips: it also infers emotional tone from the speech and produces subtle facial expressions that reflect the speaker’s mood (e.g., happiness, anger, surprise).

🧠 AI-Powered Retargeting

Audio2Face supports character retargeting, so users can apply the generated animation to their own 3D characters even when a character's mesh topology or rig differs from the model the animation was generated on.

☁️ Cloud and On-Premise Deployment

With the Audio2Face-3D Microservice, developers can deploy the system on-premise or in the cloud, converting speech into ARKit-compatible blendshapes for use in a variety of rendering pipelines (NVIDIA Docs).
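As a rough illustration of what "ARKit-compatible blendshapes" means on the consuming side, the sketch below models one animation frame as a timestamp plus a set of named weights. The names `jawOpen`, `mouthSmileLeft`, and `browInnerUp` are standard ARKit blendshape names, whose coefficients range from 0.0 to 1.0. The `BlendshapeFrame` class and `clamp_weights` helper are hypothetical and are not part of the Audio2Face-3D API; the microservice's actual output format is not described in this article.

```python
from dataclasses import dataclass, field


def clamp_weights(weights):
    """Return a copy of the weights with every value limited to [0.0, 1.0]."""
    return {name: min(1.0, max(0.0, value)) for name, value in weights.items()}


@dataclass
class BlendshapeFrame:
    """One animation frame: a time in seconds plus named blendshape weights.

    Hypothetical container for illustration; not an Audio2Face-3D type.
    """
    time_s: float
    weights: dict = field(default_factory=dict)

    def __post_init__(self):
        # ARKit coefficients range from 0.0 (neutral) to 1.0 (fully applied),
        # so out-of-range values are clamped on construction.
        self.weights = clamp_weights(self.weights)


# Made-up values for a single frame; two of them are deliberately out of range.
frame = BlendshapeFrame(
    time_s=0.5,
    weights={"jawOpen": 0.62, "mouthSmileLeft": 1.3, "browInnerUp": -0.1},
)
print(frame.weights)
```

A sequence of such frames, one per animation tick, is the kind of data a rendering pipeline would consume to drive a character's face.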

How It Works

- Input Audio — Provide a voice recording or live audio stream.

- AI Processing — Audio2Face’s neural network analyzes vocal features such as pitch, tone, and timing.

- Facial Animation Output — The system generates 3D blendshapes or animation data that can be applied to a digital character.

- Rendering — Use Omniverse, Unreal Engine, or another 3D engine to visualize the animated face.

This process allows creators to skip manual animation and focus on storytelling, character design, and production quality.
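The animation output and rendering steps above can be made concrete with the standard linear blendshape model, in which each vertex is its neutral position plus a weighted sum of per-shape offsets. This is a minimal sketch of that general technique, not Audio2Face's own code. The two-vertex mesh, the offset values, and the `apply_blendshapes` function are invented for illustration.

```python
def apply_blendshapes(neutral, deltas, weights):
    """Deform a mesh with the linear blendshape model.

    neutral: list of (x, y, z) vertex positions for the resting face.
    deltas:  dict mapping shape name -> list of (dx, dy, dz) offsets,
             one per vertex (target position minus neutral position).
    weights: dict mapping shape name -> weight, typically in [0, 1].
    """
    result = [list(v) for v in neutral]
    for name, weight in weights.items():
        offsets = deltas.get(name)
        if offsets is None:
            continue  # The mesh has no shape with this name; skip it.
        for vertex, offset in zip(result, offsets):
            for axis in range(3):
                vertex[axis] += weight * offset[axis]
    return [tuple(v) for v in result]


# Hypothetical two-vertex "mesh": an upper-lip point and a chin point.
neutral = [(0.0, 1.0, 0.0), (0.0, 0.0, 0.0)]
deltas = {
    # At full weight, opening the jaw moves only the chin point down 2 units.
    "jawOpen": [(0.0, 0.0, 0.0), (0.0, -2.0, 0.0)],
}

# A half-open jaw moves the chin halfway toward its fully open position.
posed = apply_blendshapes(neutral, deltas, {"jawOpen": 0.5})
print(posed)
```

A real engine evaluates the same sum on the GPU for thousands of vertices per frame, but the arithmetic is the same: the weights produced from audio are the only per-frame input.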

Getting Started with Audio2Face

To start using Audio2Face:

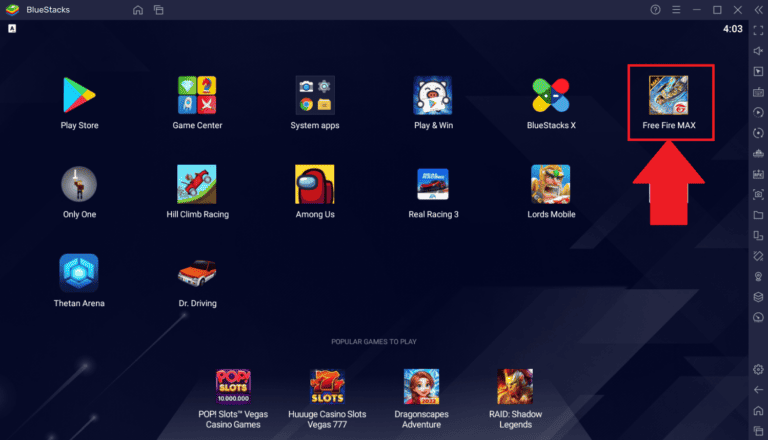

- Install NVIDIA Omniverse and the Audio2Face app.

- Load a sample character or import your own 3D model.

- Add an audio file or connect a live microphone input.

- Preview and export the generated animation as blendshapes or FBX data.

Developers can also explore the Audio2Face-3D open-source project on GitHub:

👉 NVIDIA Audio2Face-3D Repository

Use Cases

- Game Development: Rapidly create voice-synced character animations.

- Film & Animation: Speed up dialogue-driven scene production.

- Virtual Avatars: Power real-time digital humans for streaming or customer service.

- Metaverse Applications: Enable interactive, emotionally expressive avatars.

Why It Matters

Traditional facial animation is time-consuming and requires specialized skills. By automating the process, NVIDIA Audio2Face lets creators produce realistic, expressive characters at scale while cutting the time and cost of animating dialogue.

As digital humans and AI avatars become more common in entertainment, marketing, and virtual collaboration, tools like Audio2Face are paving the way for a new era of AI-driven 3D storytelling.

Learn More

- Official NVIDIA Audio2Face Documentation

- Audio2Face-3D Microservice Overview

- Audio2Face-3D GitHub Repository

- Getting Started Video Tutorial (YouTube)

In summary:

NVIDIA Audio2Face changes how creators produce facial animation: it turns plain audio input into lifelike, expressive digital performances driven by AI.